©2003 Robert Willey

The arrival of the MIDI specification and the proliferation of personal computers in the 1980s started a wave of development in music technology. The modularity of MIDI allows musicians to connect and configure equipment in provocative ways. Inserting a computer into a system provides more power and flexibility. Processors continued to get faster, allowing software synthesis to be implemented in real time with programs like Csound and Reaktor, inspiring compositions that express themselves with new timbres. The most impressive growth has been in hard drive capacity, which, combined with software development for more powerful computers, has driven a migration towards digital audio workstations, replacing much of the technology of the traditional recording studio.

A less-explored opportunity created by technological developments is to design instruments to respond to performance gestures in new ways. This may be because most players work hard to learn to control their instruments and the last thing they want is for the instrument to react in a new way, or worse, in a variety of ways. When playing a Mozart piano sonata you want to hear the same note played every time you press a certain key. On the other hand, if you want to improvise or play untraditional music you might enjoy a different response. While the focus of this article is the development and application of “programmed instruments” to provoke players to improvise in fresh ways, the resources can also be useful for composers. If you like John Cage’s idea of the “prepared piano” you may be tickled by this.

The examples shown here use a programming language called Max/MSP. For me, Max used to be the real reason for having a Macintosh. Now Cycling ’74 has created a cross-platform version for PCs. It is an elegant and powerful environment in which to program MIDI and audio applications, and now an individual’s efforts can be packaged as plugins for use with big applications like Pro Tools and Digital Performer. Examples are given in this article of simple Max patches that change the relationship between input and output. If you have a Macintosh you can download them and play along ( http://willshare.com/willeyrk/creative/papers/programmable ).

We learn to perform by over-practicing instrumental skills. When it comes time to play for other people there is no longer time to consciously control every action. If we had to think about how to get to the notes and which finger to use for each key we’d never be able to get through a complete phrase, let alone an entire piece in front of an audience. First, strong habits have to be developed that can be relied upon when the curtain goes up, especially if we’re nervous, sick, or tired. Licks, scales, chord progressions, and repertoire have to be rehearsed for years. After playing a C major scale thousands of times our finger is ready to play a “D” each time a “C” is played. We’re less likely to follow a “C” with a “C#” because of the habits that have been developed in our muscle memory. While a beginner has a hard time playing the same thing twice, a professional has an equally hard time playing something completely new.

Now some of us find times when we want to do just that. We’d like to improvise and play in a way we’ve never done before, but it’s hard to keep our hands out of our bags of tricks, the comfortable and nearly automatic gestures we’ve mastered. One way to loosen things up is to change the way the instrument responds. A new response to a gesture wakes us up and makes us more alert to new possibilities, drawing us into experimentation. Even if we execute an old lick, at least it will sound different. What follows are some examples of “programmable instruments”. I made up the term to differentiate the approach from programs that simulate musical intelligence (such as those from pioneers Joel Chadabe and George Lewis). My programs don’t make musical decisions like a live player would. They depend on the performer’s instincts and abilities, amplifying and twisting their gestures.

I find that simple transformations are enough. I like keeping the responses of the instruments just predictable enough so that the player understands what is going on and has a sense of control, but finds some novelty and surprise, making it fun to play. Usually I design them for my own interests as a keyboard player, but often I work with another player, and sometimes create a network for two or three people. One of my favorite situations was playing on a Yamaha MIDI grand (acoustic piano with MIDI output), running its output through a transformation, and sending the altered performance to a Disklavier (computer-controlled acoustic piano) and synthesizers. I’ve collaborated with a variety of instrumentalists, creating microworlds for violin, clarinet, saxophone, guitar, recorder, Buchla Lightning, and keyboard.

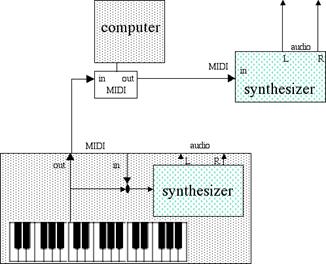

This diagram shows a computer inserted between a controller and a synthesizer:

Figure 1: MIDI data comes out of the keyboard, goes through the computer and is processed, after which it goes on to a synthesizer module to be listened to.

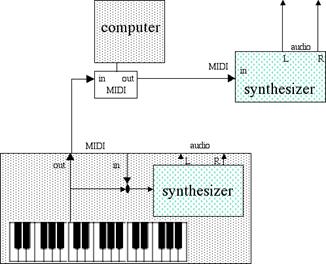

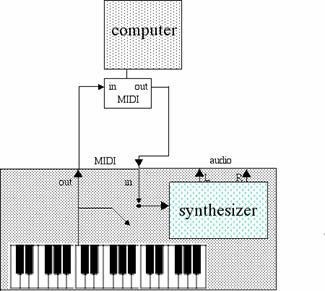

The same functionality could be obtained without an additional module by turning MIDI local control off [thereby breaking the connection between the keyboard and internal synthesizer], going out and through the computer, and returning to the instrument’s MIDI in:

Figure 2: Local control has been turned off. MIDI data goes out, goes through the computer and is processed, and comes back in to the keyboard to be played on the internal synthesizer. If local control were not turned off you would hear both the normal response and the processed performance.

In general the output from the keyboard goes into the computer running Max, which processes the performance in some way and sends commands to the synthesizer(s). [Turning local control off allows me to route the modified information back into the synth rather than setting up an additional device.]

These transforming programs are written in such a way that they are operated from the controller with keys, buttons, and pedals rather than from the computer with the ASCII keyboard and mouse. I know logically that it is the computer that is changing the performance, but since its parameters are controlled from the keyboard I slip into the sensation while playing that the keyboard itself has been changed, hence the name “programmable instruments”.

Max comes with a lot of objects that can be easily combined and configured on the screen. One of the objects is the notein box. Three outlets appear automatically on its bottom; in order from right to left they represent an incoming note’s channel, velocity, and key number. When you press a key three numbers come out. To see what is being received by notein, connect it to three number boxes by clicking and dragging from each outlet to the inlet of one of the boxes. If you try it you’ll see the velocity with which the key is played appear instantly in the middle box. [MIDI trivia: Releasing a key will display a zero for velocity. Most synthesizers turn off a note by sending out a note on command with a velocity of 0.]

Figure 3: notein connected to number boxes. The 0’s will change to numbers as soon as you play something on the keyboard.
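For readers who think in text rather than patch cords, the three numbers that notein reports can be sketched in Python. This is only an illustration of the MIDI message layout, not how Max is implemented. The function name `decode_note` and the tuple it returns are made up for this example.

```python
def decode_note(status, data1, data2):
    """Decode a 3-byte MIDI message into (kind, key, velocity, channel).

    Returns None for anything that is not a note on or note off.
    `decode_note` is a hypothetical helper, not part of Max or any library.
    """
    kind = status & 0xF0             # upper four bits: message type
    channel = (status & 0x0F) + 1    # lower four bits: channel, shown as 1-16
    if kind == 0x90 and data2 > 0:
        return ("on", data1, data2, channel)
    if kind == 0x80 or (kind == 0x90 and data2 == 0):
        # A note on with velocity 0 counts as a note off.
        # Like the number boxes in the figure, report the release as velocity 0.
        return ("off", data1, 0, channel)
    return None


print(decode_note(0x90, 60, 100))  # middle C pressed on channel 1
print(decode_note(0x90, 60, 0))    # the same key released
```

A monitor built this way would show a zero for velocity whenever a key comes up, whichever of the two release messages the controller sends.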

This could be expanded to create a MIDI monitor to help debug a setup, showing what information the computer is receiving from a controller. Another Max object, noteout, has three inlets on top that correspond to notein’s outlets. After connecting a notein to a noteout the information will flow from one down to the other. The noteout object sends the note command out the MIDI interface to any instrument that is hooked up.

Figure 4: notein connected to noteout. No processing takes place yet

If I had this patch running the synthesizer would seem to respond in the normal way to the controller. Now the fun begins. Any sort of mathematical or other process can be used to change the instrument’s response. Instead of passing the three pieces of data to the output I can change any or all of them. Adding 12 to the key number will make the notes sound up an octave. If I have the part of the patch on the right side passing notes normally and the part on the left transposing them up an octave, everything that is played on the controller will come out in octaves on the synthesizer.

Figure 5: controller performance comes out in octaves
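The octave patch amounts to sending each incoming note twice, once unchanged and once with 12 added to the key number. Here is a minimal Python sketch of that idea. The function name `in_octaves` and the range check are my own additions for illustration and are not taken from the patch.

```python
def in_octaves(key, velocity, channel, interval=12):
    """Return the notes to send for one incoming note.

    The first entry is the note as played; the second is a copy shifted
    by `interval` semitones (12 = one octave). Hypothetical helper.
    """
    notes = [(key, velocity, channel)]
    shifted = key + interval
    if 0 <= shifted <= 127:          # stay inside the MIDI key range
        notes.append((shifted, velocity, channel))
    return notes


print(in_octaves(60, 100, 1))  # middle C plus the C an octave above
print(in_octaves(60, 0, 1))    # releasing the key releases both notes
```

Because a release is just another note message with velocity 0, it passes through the same arithmetic and turns off both notes.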

The number that is added to the key number doesn’t have to be fixed at 12. It could come from a number box that could be clicked on and typed into from the ASCII keyboard, or it could come from the controller, so that I don’t have to move my hands away from the keyboard. Here I’ve given the user the option of typing in a value, or setting it with the mod wheel. Be careful not to be holding a note on the keyboard when you change the value or you’ll get a stuck note: when the key is released it is shifted by the new value, so the noteout object gets a different key number from the one that was turned on. Max patches have to be debugged just like other programs in order to find such logical errors. In case a note does get stuck, a sub-patch in the upper left hand corner has been made that sends out a “bang” (a Max-ism meaning “do it!”) when the <escape> key is pressed on the ASCII keyboard. The bang goes into another sub-patch that sends out all notes off on all channels.

Figure 6: Notes are sent out on two MIDI channels, so that you can have different timbres and volume levels for the original and shifted notes.
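The stuck-note problem comes from computing the shifted key twice, once at key-down and once at key-up, with possibly different shift values. One way to avoid it, sketched here in Python, is to remember what was actually sent for each held key. This is an alternative to the panic button described above, not what the patch does. The class name `Transposer` and its methods are hypothetical.

```python
class Transposer:
    """Shift notes by a changeable amount without leaving notes stuck."""

    def __init__(self, shift=12):
        self.shift = shift
        self.sounding = {}   # played key -> shifted key that was sent

    def set_shift(self, shift):
        """Change the shift, for example from a number box or the mod wheel."""
        self.shift = shift

    def _shifted(self, key):
        return max(0, min(127, key + self.shift))   # clamp to the MIDI range

    def note(self, key, velocity):
        """Return the (key, velocity) pair to send for the shifted voice."""
        if velocity > 0:
            out = self._shifted(key)
            self.sounding[key] = out                # remember for the release
            return (out, velocity)
        # Release the note that was turned on, whatever the shift is now.
        out = self.sounding.pop(key, self._shifted(key))
        return (out, 0)


t = Transposer(12)
print(t.note(60, 100))   # (72, 100)
t.set_shift(7)           # value changed while the key is still held
print(t.note(60, 0))     # (72, 0): the right note is turned off
```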

It’s time to make the response more disorienting. In the next patch the output is inverted: the higher you play on the keyboard, the lower the pitches come out. While you still have control over dynamics and timing, you’ll go into different keys as you play.

Figure 7: Notes are inverted. The higher you play on the keyboard, the lower the notes sound. The 0’s in the boxes down the left side will change when you play notes, showing how the key number is changed after subtracting 127 and then taking the absolute value.

MIDI key numbers are between 0 and 127, exceeding the range of a piano’s 88 keys. The inversion is accomplished by subtracting the incoming key number from 127. If I play a middle C the MIDI key number 60 comes out of the leftmost outlet from the notein object. This number travels down the patch cord I’ve drawn in, is subtracted from 127, and finally sent on to the noteout object, which sends a key number 67 (G above middle C) out the MIDI interface. If I then play a D a whole step above (key 62) I’ll hear an F (127 – 62 = 65). Pressing an E (key 64) next will cause an Eb to be heard (127 – 64 = 63), and so on. I’ve been playing an ascending C major scale but am hearing a descending G Phrygian mode instead. If I’m open to playing something other than my standard repertoire this is going to get me out of my rut.
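The arithmetic can be checked for the whole scale with a few lines of Python. This is just a worked example of the subtraction described above. The names `invert`, `C_MAJOR`, and `NAMES` are mine, and flats are used for all the black keys.

```python
def invert(key):
    """Mirror a MIDI key number: the higher the input, the lower the output."""
    return 127 - key


# One octave of C major, starting on middle C (key 60).
C_MAJOR = [60, 62, 64, 65, 67, 69, 71, 72]
NAMES = ["C", "Db", "D", "Eb", "E", "F", "Gb", "G", "Ab", "A", "Bb", "B"]

inverted = [invert(k) for k in C_MAJOR]
print(inverted)                           # [67, 65, 63, 62, 60, 58, 56, 55]
print([NAMES[k % 12] for k in inverted])  # ['G', 'F', 'Eb', 'D', 'C', 'Bb', 'Ab', 'G']
```

Read from bottom to top, the output notes G, Ab, Bb, C, D, Eb, F are the Phrygian mode on G.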

As a programmer it’s a good idea to start out with a simple idea and get it working, and then gradually add in features, testing as you go. These patches are on the website. For each example I’ve started with the simplest transformation. There are other examples online that start with the basic idea and add on other controls to make them more musically interesting. For example, once I get the basic inversion going I think I’d like to be able to hear both the normal response and the inverted one, and then add a controller to fade the inverted notes in and out. Now as the volume pedal or modulation wheel is turned up you hear more of the inverted notes, which need to go out on a different MIDI channel. Here’s a second version of “inverter”:

Figure 8: Inverted notes go out on a separate channel, allowing independent volume control from either the volume pedal or modulation wheel.

If you go to the website you’ll see more options, like having program changes to set up the sound, and pan controls so that the two sounds can come out of different positions. I’d like to have the center point of the inversion change. Maybe someday I’ll get around to inverting it around the first key in each phrase, defining a phrase as something that starts after half a second of silence. I could disconnect the mod wheel from the control over the volume of the inverted notes and have it go out to control the volume of the channel of the notes in the upper right hand corner (the ones I’m playing that are unchanged and passed straight through).
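The phrase-centered inversion has not been built, so what follows is only a guess at how it might work, written in Python rather than Max. It assumes that “silence” means no keys are held, that timestamps arrive in seconds, and that a note is mirrored around the pivot with 2 × pivot – key. The class `PhraseInverter` and everything in it is hypothetical.

```python
class PhraseInverter:
    """Invert notes around the first key of each phrase (speculative sketch)."""

    def __init__(self, gap=0.5):
        self.gap = gap             # seconds of silence that start a new phrase
        self.pivot = None          # key number the inversion is centered on
        self.held = 0              # how many keys are currently down
        self.silent_since = 0.0    # time the last key was released
        self.sent = {}             # played key -> key that was sent

    def note_on(self, key, now):
        new_phrase = self.held == 0 and now - self.silent_since >= self.gap
        if self.pivot is None or new_phrase:
            self.pivot = key       # first key of the phrase sounds as played
        self.held += 1
        out = max(0, min(127, 2 * self.pivot - key))
        self.sent[key] = out       # remember it so the release matches
        return out

    def note_off(self, key, now):
        self.held = max(0, self.held - 1)
        if self.held == 0:
            self.silent_since = now
        return self.sent.pop(key, key)


p = PhraseInverter()
print(p.note_on(60, 0.0))   # 60: the phrase starts on middle C
p.note_off(60, 0.2)
print(p.note_on(64, 0.3))   # 56: E above the pivot comes out as Ab below it
```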

The pitches in the next example are less controllable for the performer. Now the velocity with which the key is pressed becomes the key number of the output key. The harder you play a key, the higher (and louder) the note is heard. [See website for MIDI file examples]. I call this patch “refraction”:

Figure 9: The harder you play, the higher the pitch and the longer the duration of the notes that come out. The result will be atonal.

A variation of this would be to have the key number control the velocity of the output note, while leaving the velocity of the input note to control the key number of the output. Playing softer on the keyboard would then make the notes come out lower. Playing higher on the keyboard would make the output louder. That would definitely take some getting used to!
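Both mappings are simple enough to write down. The Python sketch below covers key presses only; durations and releases are left out, since the figure caption suggests the patch sets note lengths itself. The function names `refract` and `swap` are made up here.

```python
def refract(key, velocity):
    """Velocity picks the pitch: harder playing gives a higher, louder note.

    Returns (output key, output velocity). The played key is ignored.
    """
    return (velocity, velocity)


def swap(key, velocity):
    """The variation: velocity picks the pitch and key position picks the loudness."""
    return (velocity, key)


print(refract(60, 100))  # (100, 100): a hard hit on middle C gives a high, loud note
print(swap(96, 40))      # (40, 96): a soft touch high on the keyboard gives a low, loud note
```

Both work without any scaling because key numbers and velocities share the same 0 to 127 range.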

I tend to make design choices that leave the performer in command of timing and dynamics and engage the computer in contributing to the choice of pitch and timbre. Maybe it’s the result of growing up playing the piano, which is a percussion instrument. We don’t have to worry about playing in tune and don’t have as much control over tone as players of other instrument families. When I get behind the wheel of these systems I feel my primary need is to decide when something happens and how big it is. I don’t get the same physical satisfaction from causing a burst of notes to come out by dragging a mouse as I do from pushing down hard on a lot of keys. I think we know more than we can say with words or explain in an algorithm. The physicality in the effort required to get louder seems to keep me in more direct contact with my subconscious. In the examples here nothing happens unless a key is pressed or released. This makes me feel more involved as a performer, and I think it makes it easier for an audience to see that there is a connection, even if they don’t understand exactly what is going on.

In Max you can create your own sub-patches and give them names that remind you what they do. Breaking a process down into a number of sub-patches, each with its own function, helps keep your programs more structured and easier to debug. Here is a patch Christopher Dobrian and I made for a duo piece (2-way Dream). We developed our sub-patches independently between sessions and then loaded them into one computer when we got together.

Figure 10: Each performer’s patch sends information to and receives information from the other. Chris played on a MIDI guitar and I played keyboard in a piece called 2-way Dream.

The transformation can become as complicated as you wish. I have a patch called cereal that plays serial music no matter what I play on the keyboard, something that players would have a hard time doing unaided. The computer stores a series of notes (in advance or on the fly). It could be a 12-tone row (for serial music), or a sequence of another pitch collection of any length. For example, if I have recorded the series of notes C, E, F, G, Bb then each time a note is played on the keyboard the sequence will be stepped through one by one. If I’ve just started and play a low F, then a low C will be played. Playing a high F will then cause a high E to come out, and so on. The player controls the register, timing, and dynamics of the triggered notes (something fun to do), while the computer keeps track of the pitches (hard to do in the case of serial music). [See website for MIDI file examples]

Figure 11: Cereal Music – incoming notes trigger a 12-tone row, which can be inverted or played in retrograde using pedals assigned to controller numbers 68 and 69. In the upper right hand corner is the preset object. Each preset recalls any parameters of your choosing. Presets are recalled by clicking in the little boxes, or, in this patch, by stepping on a pedal (#68) so that a pre-arranged series can be conveniently accessed without leaving the keyboard. Many of the connecting lines have been hidden.
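The stepping behavior of cereal can be sketched in Python. The series is stored as pitch classes (C = 0), and each played key triggers the next pitch class in the octave closest to where the hand landed. That “closest octave” rule is my assumption about how register is chosen. The retrograde and inversion helpers use the usual textbook definitions, not necessarily the ones in the patch. All names here are hypothetical.

```python
class Cereal:
    """Step through a stored series, taking the register from the played key."""

    def __init__(self, series):
        self.series = list(series)   # pitch classes, 0 = C ... 11 = B
        self.index = 0

    def next_note(self, played_key):
        pc = self.series[self.index]
        self.index = (self.index + 1) % len(self.series)   # wrap around at the end
        candidates = range(pc, 128, 12)    # every key with that pitch class
        return min(candidates, key=lambda k: abs(k - played_key))


def retrograde(series):
    """The series played backwards."""
    return list(reversed(series))


def inversion(series):
    """The series with its intervals mirrored around the first note."""
    first = series[0]
    return [(2 * first - pc) % 12 for pc in series]


row = [0, 4, 5, 7, 10]        # C, E, F, G, Bb
c = Cereal(row)
print(c.next_note(41))        # low F played  -> 36, a low C
print(c.next_note(89))        # high F played -> 88, a high E
```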

Another patch, aleatoria, starts a series of random notes as soon as a performed note is ended. The range of pitches within which the computer picks notes is set in one of any number of presets that are then stepped through during a performance. Other parameters can be combined in the presets, such as the channels/timbres, pan positions, volumes, reverb levels, and so forth. [See website for MIDI file examples] In the old days (i.e. with the Yamaha DX7 synthesizers or KX-76 controllers) when keyboards had a small number of possible timbres there were buttons on the instrument that sent out program changes. These are handy for getting random access to the states of the program since the patch can be designed to respond to incoming program change commands by recalling the corresponding preset, changing the parameters of the environment. Designing a new response for the instrument and then setting up combinations of timbres, locations, volumes, etc. is precompositional activity. The patch starts responding better to certain types of input and becomes a composition itself, one that deserves a new category.
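The preset idea can be sketched in Python: each preset holds a pitch range and a channel, an incoming program change recalls one, and each key release produces a handful of random notes inside the current range. The preset values, the number of notes per burst, and all the names below are invented for illustration. Timing and velocities are left out.

```python
import random

# Hypothetical presets: program number -> parameters recalled together.
PRESETS = {
    0: {"low": 36, "high": 48, "channel": 1},
    1: {"low": 72, "high": 96, "channel": 2},
}


class Aleatoria:
    """Answer each released key with random notes from the current preset."""

    def __init__(self, presets, count=4, seed=None):
        self.presets = presets
        self.current = presets[min(presets)]   # start on the lowest-numbered preset
        self.count = count                     # notes per burst (an assumption)
        self.rng = random.Random(seed)

    def program_change(self, number):
        """Recall a preset from a button on the controller; ignore unknown numbers."""
        if number in self.presets:
            self.current = self.presets[number]

    def note_off(self):
        """Return (key, channel) pairs to play after a performed note ends."""
        p = self.current
        return [(self.rng.randint(p["low"], p["high"]), p["channel"])
                for _ in range(self.count)]


a = Aleatoria(PRESETS)
print(a.note_off())      # four notes between keys 36 and 48 on channel 1
a.program_change(1)
print(a.note_off())      # four notes between keys 72 and 96 on channel 2
```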

I’ve just gotten access to Max/MSP (which adds audio analysis and processing to what was formerly limited to MIDI) and Jitter (Cycling ’74’s companion program which adds video processing tools). Over the years we’ve become accustomed to finding word processing and music production tools on the same desktop. A few years ago we may have felt the need to get involved with graphics and tried to keep up with the opportunities offered by programs like Photoshop and Flash. Now we’d better get busy with video! If you like Max you will want to get Jitter, which opens up opportunities to process and perform images. The three programs Max/MSP, Jitter, and Pluggo sell together for about $1000 and provide an integrated environment for multimedia software development.

For my master’s degree project in computer music at the University of California San Diego I used “characteristic depending panning”, with sounds’ own inherent qualities, such as volume, pitch, and beat position, controlling movement in space. I’m looking forward to getting back to this approach and extending it with graphics and surround sound in DVD productions. Another piece of good news is that patches that I come up with in Max can now be turned into stand-alone programs and plugins for widely available platforms like Pro Tools and Digital Performer.

One advertising phrase that’s become overused and annoying for me is “for children of all ages” and its variations like “for the young and the young at heart”. The phrase “the only limit is your imagination” has been similarly overused in descriptions of creative tools. It should have been saved for cases like Max. Software tools like Max and Jitter let us more quickly implement and test the intuitions of our minds. What if the loudness of the music controlled the red-ness of the image on the screen, the speed of notes the blue-ness, and the pitch class complexity the amount of green? Let’s see…where shall we get the pixels’ transparency from? The sky is definitely not the limit.

Robert Willey teaches music media and theory at the University of Louisiana at Lafayette. He is currently engaged in experimental multimedia and surround sound projects. For more information visit http://willshare.com/robert.